|

I’m a fourth-year PhD student in the Department of Computer Science at Shanghai Jiao Tong University. Since 2019, I have been a member of the ANL Lab under the supervision of Prof. Fan Wu and Zhenzhe Zheng. Prior to that, I received my bachelor's degree from Shandong University. My research interests include Network Economics and Online Advertising. Currently, I am focusing on intelligent decision-making in uncertain environments. I am also interested in Computer Vision in my spare time. Email / Google Scholar / GitHub / Twitter / Resumé (PDF) |

|

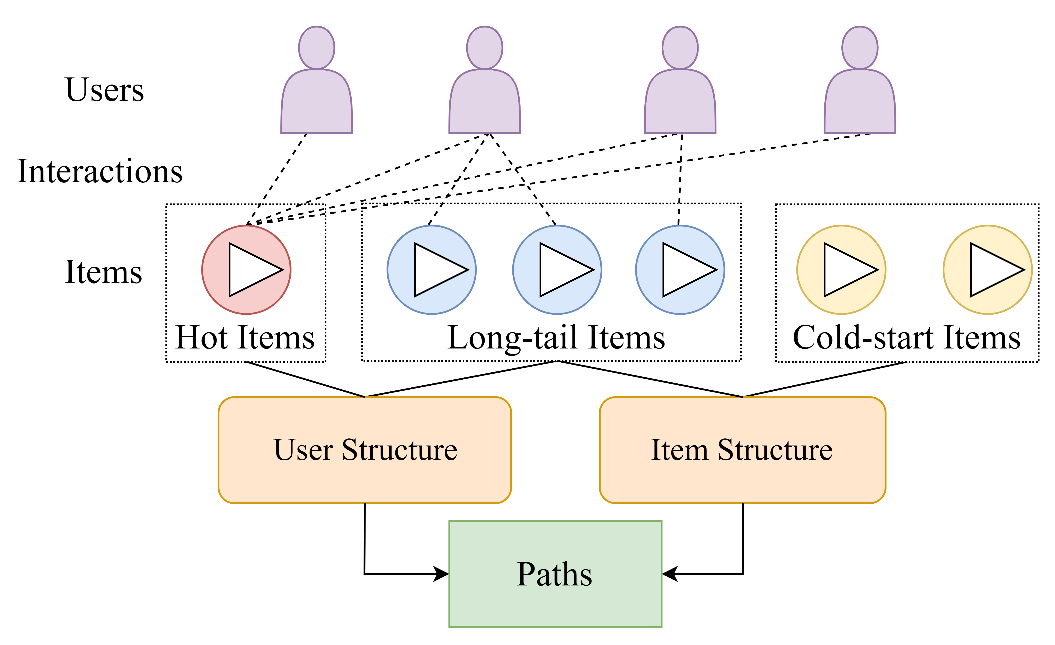

Zhen Gong, Xin Wu, Lei Chen, Zhenzhe Zheng, Shengjie Wang, Anran Xu, Chong Wang, Fan Wu. RecSys, 2023. Paper (coming soon). In this work, we tackle cold-start and long-tail item recommendation for end-to-end retrieval models. The proposed FIDR model has two joint end-to-end structures: a User Structure and an Item Structure. To the best of our knowledge, FIDR is the first to address the cold-start and long-tail recommendation problem for end-to-end retrieval models. |

|

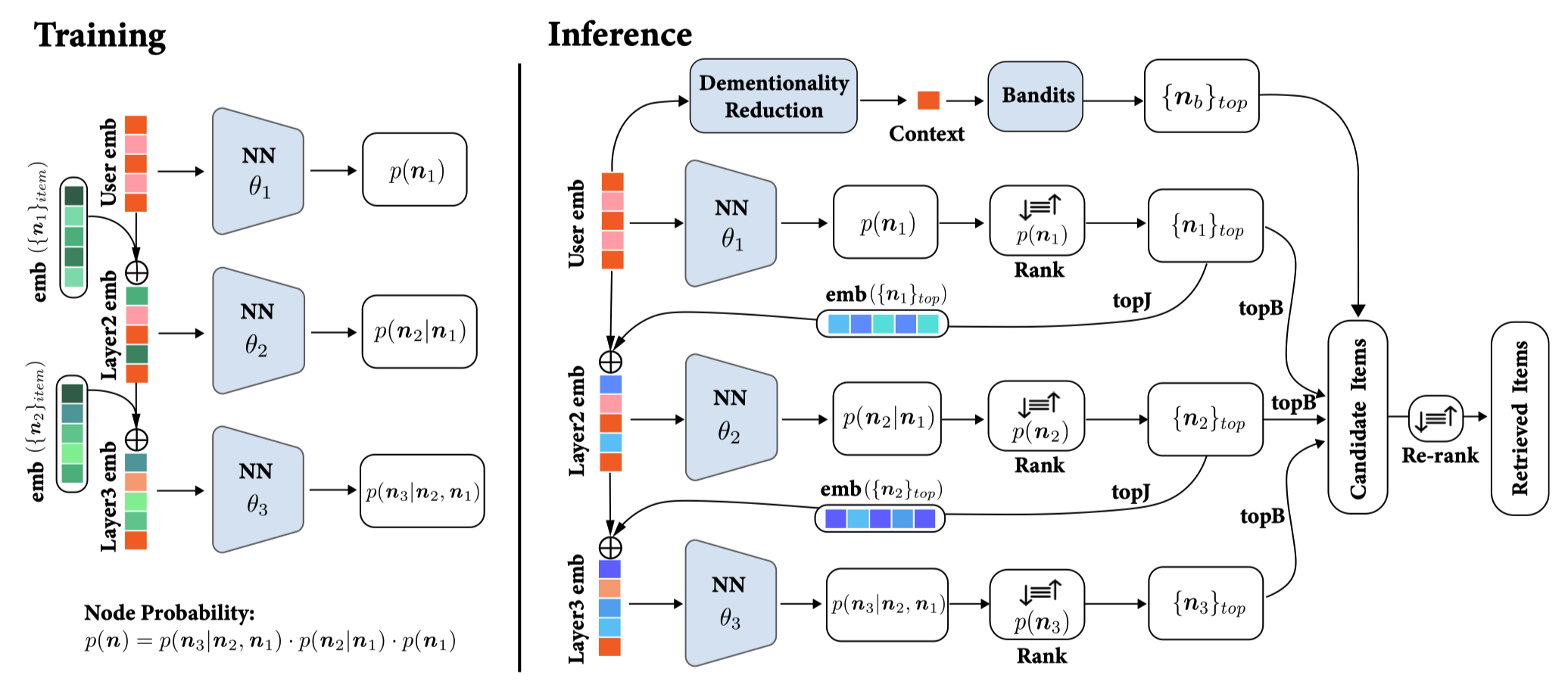

Anran Xu, Shuo Yang, Shuai Li, Zhenzhe Zheng, LingLing Yao, Fan Wu, Guihai Chen, Jie Jiang. DASFAA, 2023. Paper (via Springer). To address the long-tail phenomenon in recommendation systems, we propose HIT, a Hierarchical Tree-based model with variable-length layers. HIT consists of a hierarchical tree index structure and a user preference prediction model. By dynamically adjusting the lengths of the layers in its tree index structure, it can fully exploit all the training data, which effectively alleviates the long-tail problem. |

|

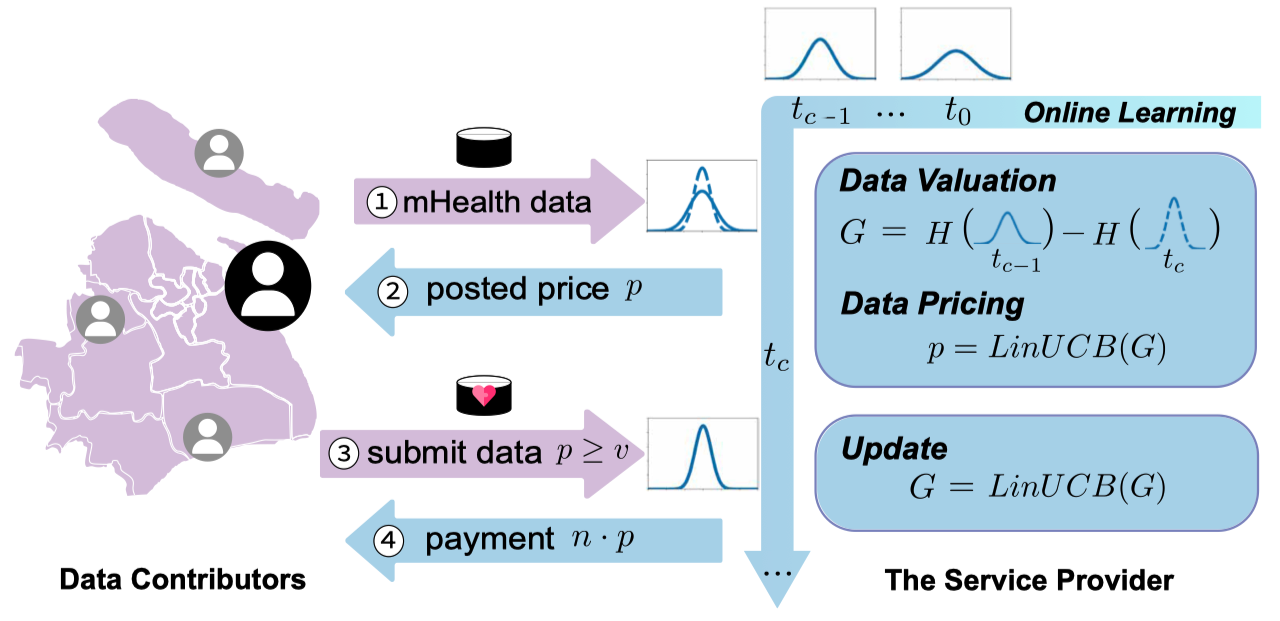

Anran Xu, Zhenzhe Zheng, Fan Wu, Guihai Chen. INFOCOM, 2022. Paper / Slides. In this paper, we present VAP, the first online data Valuation And Pricing mechanism, to incentivize users to contribute mHealth data for machine learning (ML) tasks in mHealth systems. Evaluation results show that VAP outperforms state-of-the-art valuation and pricing mechanisms in terms of online calculation and extracted profit. |

|

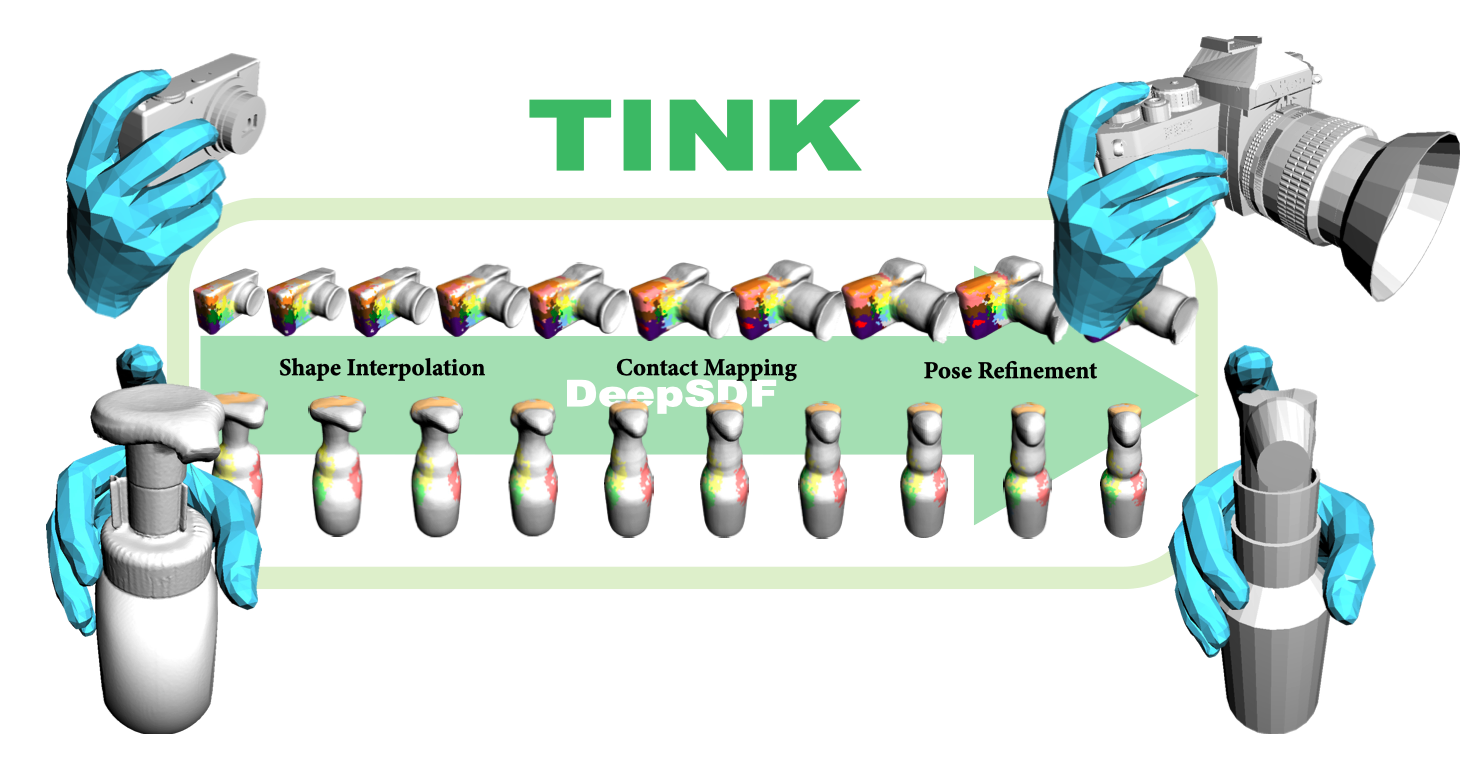

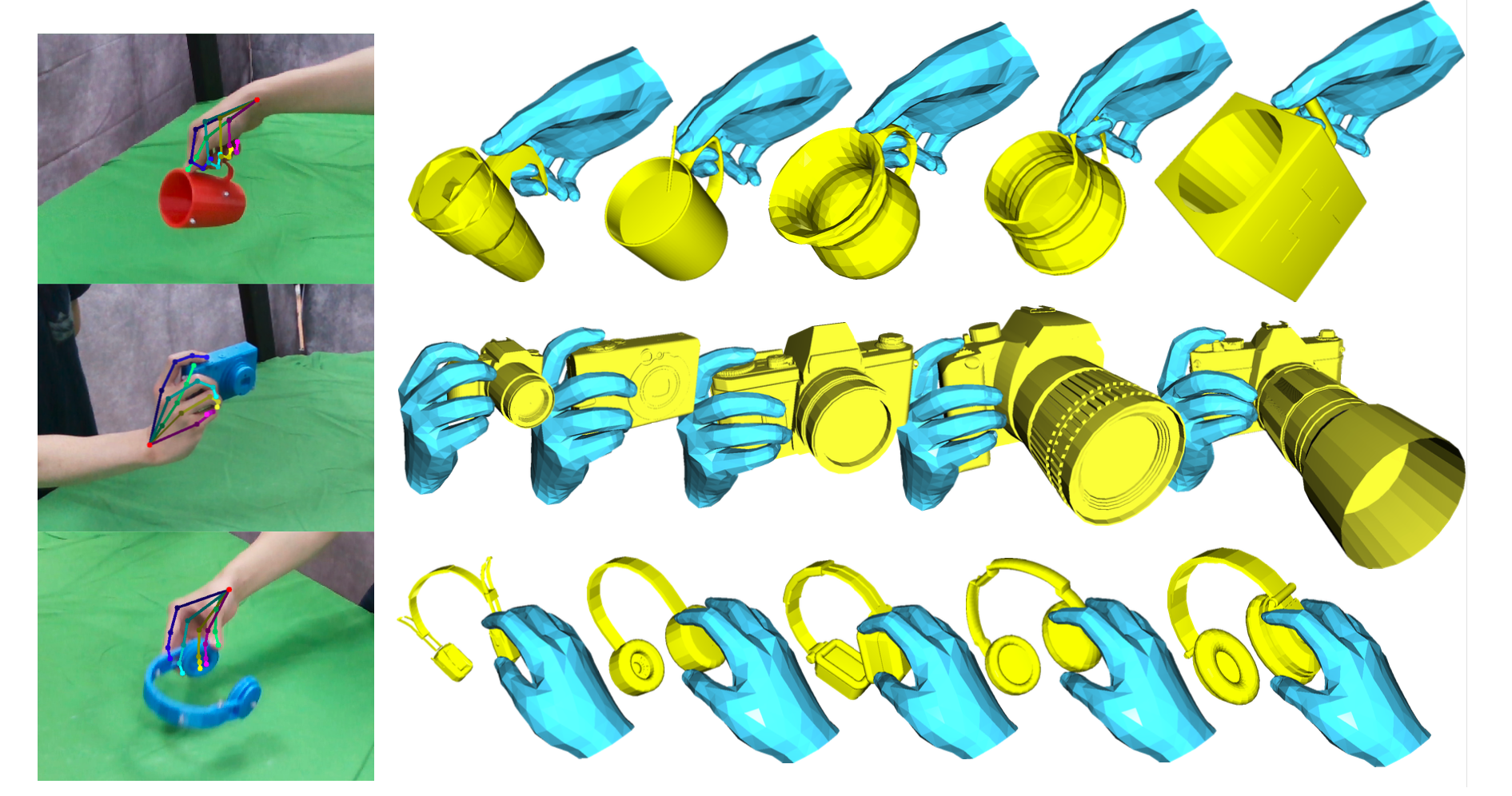

Lixin Yang, Kailin Li, Xinyu Zhan, Fei Wu, Anran Xu, Liu Liu, Cewu Lu. CVPR, 2022. Paper / Dataset / Code. Learning how humans manipulate objects requires machines to acquire knowledge from two perspectives: understanding object affordances, and learning human interactions based on those affordances. In this work, we propose OakInk, a multi-modal and richly annotated knowledge repository for visual and cognitive understanding of hand-object interactions. Check our website for more details. |